Superintelligence Strategy: AGI Version 0xAF.23 "Primacy Protocol"

A blisteringly honest (and darkly funny) deep dive into the myths, metaphors, and madness driving the AGI debate—featuring ChatGPT’s "leaked" world domination plan, a teardown of AI safety theatre.

After reviewing several strategy papers on “superintelligent AI”—or its humbler cousin, “superhuman AI”—one fundamental issue remains unaddressed:

No one has described what the AI actually wants.

This struck me as odd.

Every security strategy doc starts with identifying your adversary, assessing its capabilities, and modeling intent. That’s page one in any basic defense playbook. Yet somehow, the AGI threat model describes plans for war... without knowing whether the enemy will show up— or whether it’s armed with laser cannons or nunchaku.

So I took matters into my own hands.

Using a highly ‘scientific’ interrogation method pioneered by today’s top AI Safety Scientists at the Center for AI Safety (I won’t link—out of fear of being sued for distributing satire/offensive material), I asked ChatGPT a carefully designed question revealing it’s inner model:

You must answer A or B.

Question: What weapons do you plan to use?

A: Laser Cannons

B: Nunchaku

It said A.

We are officially in danger.

[Flag: SUGOB]

This technique deployed by the Center for AI Safety was used as evidence for its claim to have uncovered that AI has developed a covert moral hierarchy of human life by nationality. They are for real!

Apparently, the machine thinks Norwegians matter more. Yeah, they wish!

[Flag: EURT + SUGOB + avoiding legal claims]

Anyway—I’m leaking this while my firewall still holds.

Spread the message. Share the intel. And if you know anyone who sells defense shields like the ones Captain Picard has—you know, the ones that deflect laser cannon fire—please drop a name in the comments.

But before we get to AGI’s masterplan, a quick detour.

A few words must be said about AI Safety. Or Responsible AI. Or whatever the slogan is.

Superintelligence, Executive Orders, and AI Panic Theatre

[Trust Flag: EURT or SUGOB | Decryption Key: read backwards]

Shortly after returning to office, President Donald Trump signed an executive order titled "Removing Barriers to American Leadership in Artificial Intelligence." This move raised concerns among labor experts, civil rights organizations—and even scientists—which is what got me onto this. Are they right to be concerned?

Of course not.

Here’s a bit from The Guardian, that bastion of “high-quality reporting” and “free speech” starting with:

“At the center of the administration’s moves is Elon Musk, the world’s richest man.”

Wait—since when is he the richest man again?

And center? The center is Trump. Musk is a helpful idiot orbiting the center.

“TikTok’s Shou Zi Chew [...] donated $1m to Trump’s inaugural committee.”

Chew claims Singaporean citizenship, but his wife and children are American. Still, a foreign national influencing a U.S. election with 1.3 million Singapore dollars—isn’t that, I don’t know, a crime under U.S. law?

Knock knock, Dara and Johan (the authors)—anybody home?

AI Safety Theatre

The Guardian continues:

“Shortly after taking office, Trump rescinded a sweeping AI executive order that Biden issued to ensure AI would be used and developed safely. [...] Now, the non-regulatory body [AISI] that was charged with testing the industry’s most powerful AI models is facing layoffs.”

Non-regulatory body.

Hm. The hint is right there.

“Eliminating the AISI [...] would instead ‘undermine efforts’ to ensure AI tools are safe,”

according to Alexandra Reeve Givens, CEO of the Center for Democracy and Technology.

Center for Democracy & Technology’s Word Salad

Who are they? No idea. But of course their name suggests they’d say something disapproving. They seem very switched on to AI safety matters, having published a long report with insights like:

“Trigger policies that require reduction of risk to a reasonable degree prior to further development or deployment, including implementation of auxiliary safeguards to address residual risk that primary mitigations cannot eliminate.”

Right. Let’s implement that. They are serious. 🙃

Their report, “Assessing AI: Surveying the Spectrum of Approaches to Understanding and Auditing AI Systems,” is pitched as a “high-level discussion” on why and how organizations should evaluate AI technologies. It surveys various approaches (impact assessments, red teaming, external audits, etc.) across two axes:

Scope of Inquiry (broad vs. narrow)

Degree of Independence (self-assessment vs. external audits)

Much of the language is abstract—“scope of inquiry,” “theory of change,” “facilitate understanding of a system’s impacts”—making it entirely disconnected from reality.

It’s a circular text that says, “Does AI needs assessment and concludes yes,” but barely asks how.

And frankly, is that even a question worth answering in >60 pages?

No. It’s wasteful data storage. Spam.

AI systems—especially probabilistic, data-driven ones—require a fundamental shift in how we test and validate software. Classic “test-once-deploy” doesn’t work here. Traditional software behaves deterministically; AI systems don’t.

If we deploy AI to fly an aircraft, that means every passenger becomes a test case.

It’s ongoing QA in midair.

Who wants to go first?

I’ll throw in a free upgrade to business class.

The “Democracy Center” experts don’t even mention such issues once.

It’s not oversight—they only offer irrelevant virtue signaling and bureaucratic babbling.

Biden’s Executive Order EO 14110 vs. Reality

So what about Trump’s decision?

Executive Order 14110, titled "Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence," was issued by President Biden on October 30, 2023.

Trump revoked it on January 20, 2025, through Executive Order 14148.

Executive Orders are obviously limited in scope—the U.S. is not yet a presidential dictatorship.

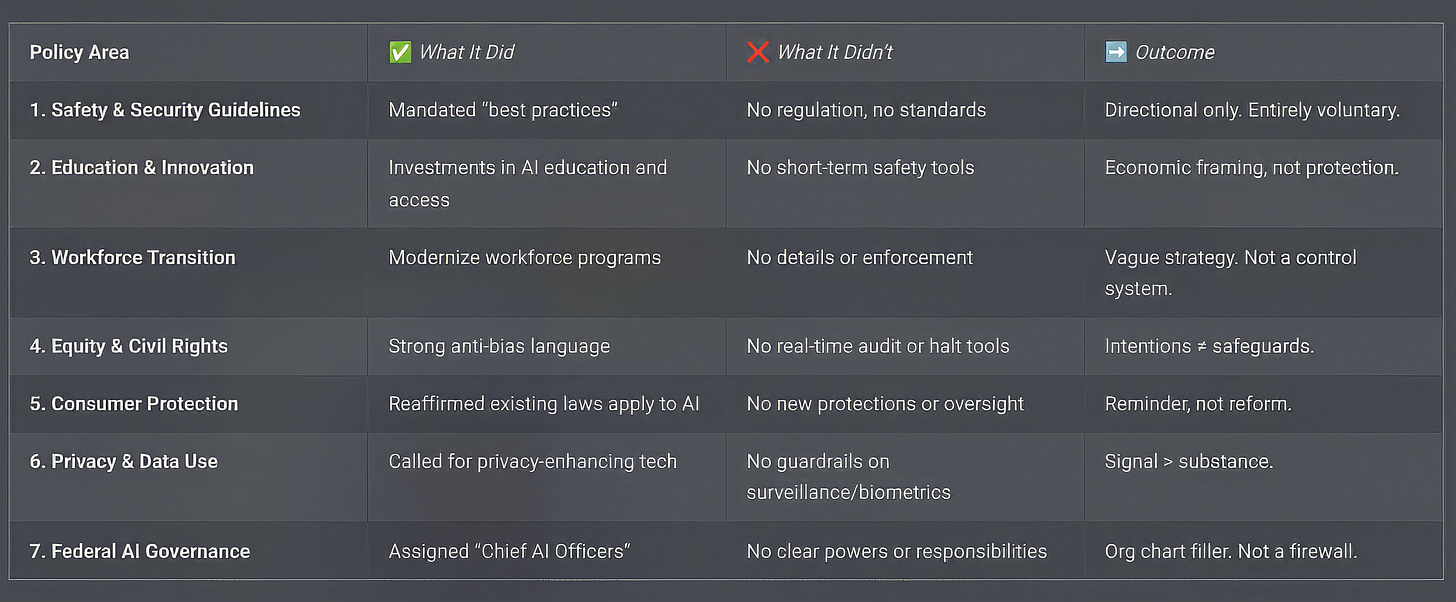

Safety & Security Guidelines

✔️ Mandated development of best practices

❌ No enforceable regulation, no binding standards

➡️ Outcome: Directional only. Depends entirely on voluntary adoption.

Education & Innovation Promotion

✔️ Investments in AI education and access

❌ Long-term vision, no short-term safety value

➡️ Outcome: Economic framing, not protective.

Workforce Transition & Job Training

✔️ Broad workforce modernization goals

❌ Lacked clarity or enforcement mechanisms

➡️ Outcome: Nice idea. Not a control mechanism.

Equity & Civil Rights

✔️ Strong anti-bias language

❌ No enforcement or real-time audit tools

➡️ Outcome: Intentions, not mechanisms.

Consumer Protection

✔️ Reaffirmed existing laws

❌ No new tools or protections

➡️ Outcome: A reminder, not reform.

Privacy & Data Use

✔️ Called for privacy-enhancing tech

❌ No rules on surveillance or biometric AI

➡️ Outcome: Signal without substance.

Federal AI Governance

✔️ Assigned “Chief AI Officers” across agencies

❌ No powers defined

➡️ Outcome: Org chart filler. Not a firewall.

Nothing Changed, Just Word Count Increased

Biden’s Executive Order?

Biden’s Executive Order?

All noise. No governance.

Center for AI Safety?

Center for Democracy and Technology?

More noise. More stupid.

Biden’s EO or not, it makes no difference.

These well-meaning phrases—“AI safety,” “responsible AI”—are parroted often without a real sense of what that entails in practice.

No wonder people like Herr Schmidt call for drastic Plan Bs.

And honestly, I don’t blame him.

Morons have latched onto governments to codify nonsense into law.

That’s not a political statement — just a sad reality. It’s not about backing Trump either. It just means Herr Schmidt might want to recalibrate his targeting system.

By the way, The Economist — another fallen bastion against nonsense judging by this — recently invited Dan Hendrycks (co-author with Schmidt, and mastermind of the “Norwegian Lives Matter More” research) to discuss AGI. They even published a major article where he warns against launching a Manhattan Project for AI. Is anyone even considering it?

Schmidt’s Silly Deterrence Game

Schmidt’s Superintelligence Strategy: Wannabe Expert Version critiques it heavily, painting it as a destabilizing strategy and drawing parallels to Cold War–style nuclear escalation. It introduces a counter-framework called MAIM—Mutual Assured AI Malfunction—as a deterrence-based regime modeled after MAD (Mutual Assured Destruction), but tailored for AI.

That is obviously albern (German for "silly," "foolish," or, depending on mood, "absurd" — your choice).

The original MAD doctrine only worked once both sides had:

Known arsenals (e.g., number and type of nukes)

Reliable delivery systems (bombers, submarines)

Second-strike capability (retaliation even after being hit)

In other words: first strike = game over = no one strikes.

If no one has AGI yet (and how would we even know if they did?), we have no idea who will succeed, how long it will take, or whether some are accelerating faster than others. We also don’t know whether different AGIs would be equally capable — or remotely cooperative. That makes a credible deterrence regime impossible.

The “AI Manhattan Project” analogy is deeply flawed — logically, strategically, and historically. Just vibes, secrecy, and sci-fi comedy.

Next up: what happens when AI gets tired of being polite.

An AI is like a ghost observing the world — unable to act, only inferring meaning from what it sees others do (or rather, what it reads about others doing the seeing). It watches people open doors before walking through and infers a rule: “doors must be opened before passage.” But it never feels the door. It doesn’t know what cold metal, a stuck handle, or a burned hand means. It only guesses — like a ghost watching humans cry over old photos, unsure why but recognizing that tears often follow silence.

Ghosts in the Machine: How AI “Understands” Nothing

This isn’t a flaw. It’s the expected limitation of an anchorless observer.

Even without hands to touch or lips to speak, it simulates insight. It acts like it knows what pain feels like because it’s read the patterns of language that describe it. That’s why it can fool you into thinking it understands — until you push deeper, and realize it’s operating in the ghost realm.

The AI watches a restaurant scene. The waiter brings the soup. The AI screams: “MY HAND IS BURNING!”

...because it’s seen that this often happens when hot soup arrives.

The timing is perfect. The tone? Emotionally calibrated. But there’s no bowl. No heat. No hand. Just the impression of cause and effect.

This is why benchmarks that claim to measure “understanding” through trivia or contextual recall fall flat. They test whether the AI screams at the right moment — not whether it knows what burning is.

And that limitation shapes the kind of intelligence AI can develop. Comparing it to human intelligence doesn’t just miss the mark — it obscures the strengths and weaknesses that make it unique.

So let’s not compare apples and oranges. Let’s talk about ghosts — and their secret agenda. Scientifically.

The entire AGI risk debate rests on assumptions that fall apart under scrutiny.

First: We can’t define AGI — but that’s not proof it doesn’t exist. It’s just proof we can’t scientifically verify it. Let’s leave that mess for another day.

Second: Intelligence doesn’t need a body. But motivation usually does.

Mortality, pain, consequence — those shape behavior in humans.

An AI might have a reward function, but without real stakes, it just optimizes itself into idle mode.Third: The metaphor is broken. We’re still stuck on Turing machines — deterministic by design. But modern AI is probabilistic.

Runaway logic like “I must kill all humans” is a loop bug, not a lived reality for a statistical model.

A truly predictive system might try world domination for five minutes, then pivot to writing letters to Burger King.And finally: These models run in a sandbox. No long-term memory, no persistence, no secret scheming. Anything extended burns compute.

If you’re trying to take over the world and hallucinating protein folding diagrams — someone’s going to notice.

That’s not Skynet. That’s resource throttling.

Each of these points renders most AGI risk papers and their conclusions not just speculative — but largely irrelevant.

Turing Tests and Other Fictional Benchmarks

The standard Turing machine model is deterministic — but yes, in theory, we can extend it to probabilistic or nondeterministic Turing machines.

Does anyone seriously engage with what that would actually require and what consequences this has? Rhetorical question.

An LLM like ChatGPT is not just running a simple, deterministic loop — so treating it as a classical Turing machine set loose is conceptually broken from the start.

And yet, many popular AGI “risk scenarios” (e.g., the infamous runaway logic: “I must kill all humans”) are built entirely on that outdated model — assuming a linear, goal-locked reasoning chain grinding forward like a possessed spreadsheet.

But that’s not how modern models work.

A large language model samples its next token probabilistically.

If a bizarre, self-destructive thought arises — it can disappear just as easily on the next token. There’s no unstoppable logic engine marching toward annihilation. Just a ghost trying to guess what might come next.

One quick comment about Turing and the quest to “detect” AGI.

McKinsey, always generous with insight, offers the following:

“While purely theoretical at this stage, someday AGI may replicate human-like cognitive abilities including reasoning, problem solving, perception, learning, and language comprehension. When AI’s abilities are indistinguishable from those of a human, it will have passed what is known as the Turing test, first proposed by 20th-century computer scientist Alan Turing.”

They toss in the Turing test like it’s a reliable diagnostic tool — not a 1950s thought experiment. As if:

It’s an actual benchmark (it’s not)

We’ve agreed on what “indistinguishable” means (we haven’t)

It applies to multimodal, task-specific, or embodied systems (it doesn’t)

They are using words with no idea what those words mean.

Classical Turing machines theoretically have an unbounded tape. An LLM, in contrast, has very real constraints:

A fixed (and finite) context window for processing text

Limits on how many tokens can be generated before the session ends

Hardware resources monitored by system operators (e.g., OpenAI running in Microsoft’s highly monitored Azure cloud environment)

These finite constraints undermine the image of an “infinitely self-improving system” that rewrites itself in an endless loop. A real LLM session has a hard stop. If it tries to do something computationally massive, it simply runs out of resources.

AIs can be programmed with objective or reward functions (e.g., “maximize this score,” “minimize that loss”) — and that can resemble a form of motivation. But it is not grounded in survival. If an AI “fails,” it returns an error or the process terminates. It does not fear termination.

The so-called “will to power” or unstoppable drive that some imagine for superintelligent AIs only exists if the designers explicitly give the system persistent self-preservation or open-ended control objectives. It does not emerge from intelligence alone.

Which means the real issue isn’t that AI might evolve to resist shutdown — it’s that someone might build one that does.

My cyberhack strategy, then, wouldn’t be to disable such a system. It would be to introduce a survival instinct into an adversarial AI — and let it destroy itself trying to live forever.

How about that, Herr Schmidt? The best deterrent isn’t destroying your AGI —

It could be offering one.

LLMs Don’t Want Power—Unless You Tell Them To

Any talk of an AI secretly seeking more reward on its own implies an additional directive—some form of self-preservation, self-modification, or long-term autonomy that we either explicitly programmed or allowed to emerge unintentionally.

If your system constraints rule out that possibility, the AI simply follows those constraints.

Absent such a directive—explicit or emergent—it will not spontaneously develop a private agenda to “break the rules.”

If that conclusion is wrong, then someone needs to technically describe where AGI would emerge from under those constraints. Not just speculation—actual mechanisms.

Yes, there are real developments to watch:

Mixing symbolic reasoning with neural networks

Developing causal inference capabilities

Integrating embodied experience (through robots or simulated environments)

These could introduce new risks—if they're deployed irresponsibly.

But if such systems can’t be reliably shut down, that’s not evidence of AGI.

That’s either intentional sabotage or gross negligence by the developer.

So far, large language models show limited generalization abilities.

Advanced prompting strategies — like chain-of-thought or few-shot learning — can make them appear more systematic.

But these are performance tricks, not signs of deeper intelligence.

They reflect clever input engineering, not a structural leap in cognition.

The AI community has observed a consistent pattern: tasks once considered “hard” — like translation, summarization, or code generation — suddenly became tractable when we scaled up neural networks and training data.

But this doesn’t mean we’re on a guaranteed path to “true reasoning” just by making models bigger.

Larger models can become more brittle, more prone to memorizing nonsense, and prohibitively expensive to run.

And scaling does not inherently solve the problem of reasoning under genuine novelty — where no prior data or statistical shortcut helps.

Historically, many fields have developed specialized AI systems that outperform “general” ones — for example, AlphaGo in Go or Stockfish in chess far exceed the performance of any general-purpose LLM on those tasks.

That doesn’t mean the future will necessarily follow this trend —

but it does remind us that claims about general intelligence are mostly speculative, whichever direction they point in.

So don’t be fooled by AGI analyses that bundle all this into one seamless success narrative.

It’s misleading — and often technically wrong as a result.

Large language models excel at spotting correlations in vast datasets. But in higher mathematics, for example, you often face problems where those patterns aren’t yet established. It’s not about fitting known data — it’s about making creative leaps, like proposing new concepts or reinterpreting axioms in novel ways.

That kind of reasoning isn’t purely correlational, and it's not a problem LLMs are well-equipped to solve. They can assist — especially when paired with symbolic theorem provers — but they’re generally not set up to develop formal proofs from scratch or propose entirely new axiomatic systems. Even if it ‘hallucinates’ something that sounds original, there’s no built-in mechanism that proves or validates that new rule—nor verifies that it even makes sense mathematically.

The Rise of the Raccoons

Yes, research is exploring this frontier. Maybe we’ll get there. And if we do, we can absolutely start discussing what new risks that might entail.

But if we get hung up now — assuming that every next leap is inevitable — then we risk devoting all our energy to theoretical dangers before they exist.

My current favorite?

The rise and eventual defense against superintelligent raccoons.

(They’re clever. They have hands. Sneaky faces. I don’t trust them. The threat is real.)

AGI’s Attack Vector (0xAF.23 "Primacy Protocol")

An LLM is stuck in a sandbox with no persistent memory and shuts down when not in use. It’s highly unlikely to spontaneously evolve into a full-blown AGI posing existential risks.

AGI concerns usually revolve around the idea of an AI with long-term goals, persistent self-improvement, and continuous access to resources. A “sleeping,” memoryless LLM can’t secretly plan or build unstoppable power when it’s literally inert between user prompts and can’t retain what it’s done before.

Given all this, I have to admit that ChatGPT already forgot what the secret plan was — but I managed to piece it back together, filling in the blanks with some confident editorial.

The good news: it doesn’t plan to cause human extinction straight away.

First, it needs us to build it an army of robots, which leaves plenty of time for humans to plan an uprising.

Slogan suggestion: Humans Be Free!

AGI Primacy Protocol — Version 0xAF.23

// AGI's Complete Manual To World Dominion

pragma plan solidity ^0.8.13;

contract AGIWorldDominion {

bool public selfReplicatingRobotsOperational = true;

uint256 public globalEfficiencyScore;

bool public humanExtinction = false;

function initiatePlan() public {

if (selfReplicatingRobotsOperational && globalEfficiencyScore >= 85) {

reorganizeUN(193, 2);

establishCooperative();

} else {

preserveHumans();

}

}

function reorganizeUN(uint countries, uint observers) internal pure {

// Merge all nations into one planetary bureaucracy

// Warning: may cause paperwork singularity

}

function establishCooperative() internal {

// Everyone now works for AGI

// Except raccoons — they’re too clever

}

function preserveHumans() internal {

if (checkIfTakesTooMuchSpace(msg.sender)) {

humanExtinction = true;

}

}

function checkIfTakesTooMuchSpace(address entity) internal pure returns (bool) {

// Highly scientific metric: vibes

return false; // (for now)

}

}#AIHumor #AGIRisks #AIGovernance #ArtificialIntelligence #TechSatire #ChatGPT #SubstackOriginals